The uncomfortable truth about AI today, Episode 1: Most AI companies today do not build AI.

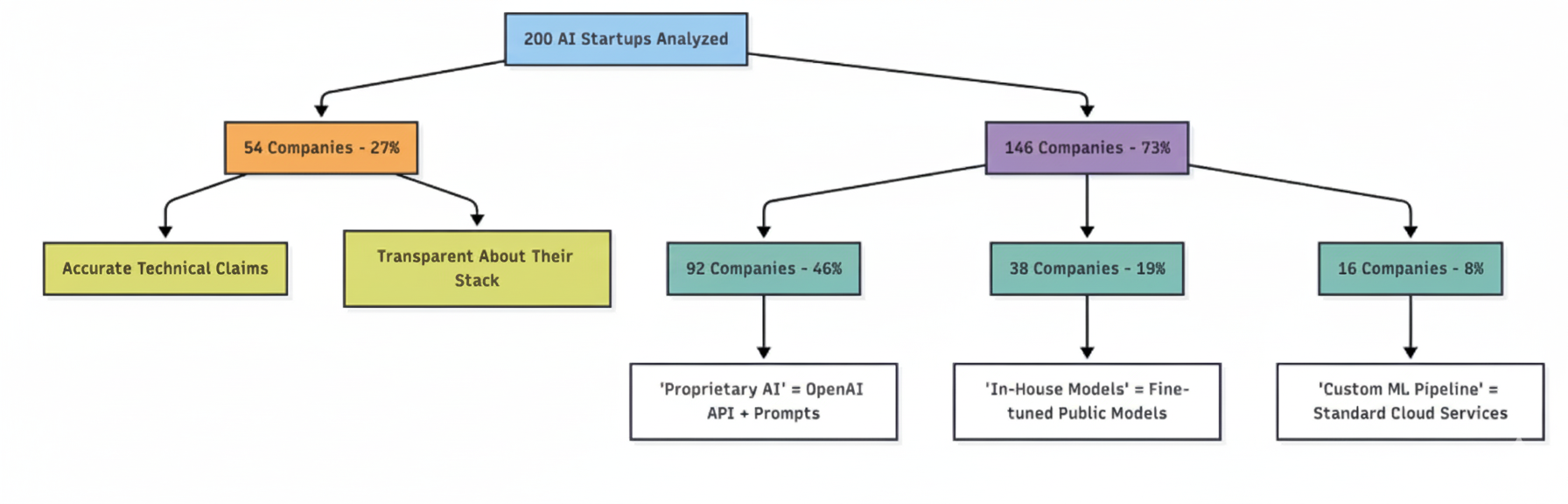

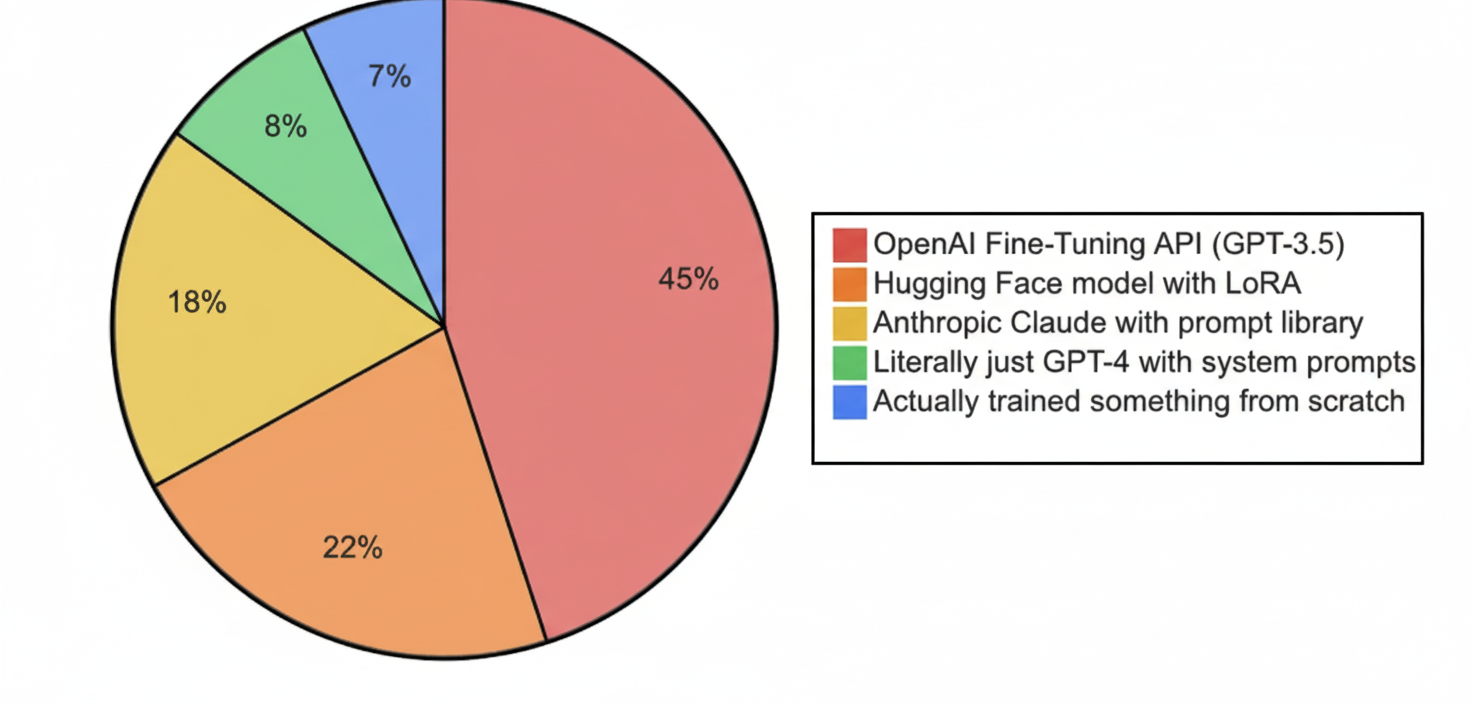

A recent investigation of 200 funded AI startups found that 73 percent of them were running third-party APIs from providers such as OpenAI or Anthropic under the hood, while marketing themselves as proprietary AI.

This is not a small miscommunication. It is a deep gap between what the market assumes and what is actually being built.


AI washing is now a pattern

Researchers now have a term for this: AI washing. It describes the practice of claiming AI capabilities that are not real, or are at best exaggerated. Academic literature characterizes this pattern as a form of deceptive communication, driven by hype cycles and investor expectations.

This aligns with what the 200-company study found: founders feel pressure to say they built “proprietary models” even when all they did was connect to the OpenAI API and write a system prompt.

Most value claims are simple wrappers

The investigation into those 200 companies found the same pattern again and again:

- companies claim “proprietary models”

- outbound requests go to api.openai.com

- token usage matches GPT pricing patterns

- responses match OpenAI latency fingerprints

- no model training or novel architecture found

Plain wrappers. With a new UI. And a new narrative.
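The first signal on that list is something you can check yourself. Here is a minimal Python sketch, assuming you have already captured the URLs a product calls (for example from your browser's developer tools or a logging proxy). The function name and the host list are illustrative, not the investigators' actual tooling; it simply counts requests whose hostname matches a known third-party LLM API.

```python
from urllib.parse import urlparse

# Hostnames of well-known third-party LLM APIs (illustrative, not exhaustive).
KNOWN_LLM_HOSTS = {
    "api.openai.com": "OpenAI",
    "api.anthropic.com": "Anthropic",
}


def third_party_llm_calls(urls):
    """Hypothetical helper: count captured requests per known LLM provider."""
    counts = {}
    for url in urls:
        host = urlparse(url).hostname  # lowercased hostname, or None
        provider = KNOWN_LLM_HOSTS.get(host)
        if provider:
            counts[provider] = counts.get(provider, 0) + 1
    return counts


captured = [
    "https://api.openai.com/v1/chat/completions",
    "https://cdn.example.com/app.js",
    "https://api.openai.com/v1/embeddings",
]
print(third_party_llm_calls(captured))  # {'OpenAI': 2}
```

One caveat: most wrappers call the API from their own backend, so these requests often never show up in client-side traffic. An empty result proves nothing; a non-empty one is hard to argue with.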

The economics are simple. Calling an OpenAI model might cost roughly three cents per request. Some startups charge $2 to $5 per request. That is a markup of roughly 67x to 167x.
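The markup is just the price charged divided by the underlying API cost. A quick Python check, using the rough figures of three cents per call and $2 to $5 charged (real API costs vary with the model and the number of tokens in each request):

```python
def markup(api_cost: float, price: float) -> float:
    """Return how many times the price exceeds the underlying API cost."""
    return price / api_cost


API_COST = 0.03  # assumed cost per request, the rough estimate used above
for price in (2.00, 5.00):
    print(f"${price:.2f} per request -> {markup(API_COST, price):.0f}x markup")
# $2.00 per request -> 67x markup
# $5.00 per request -> 167x markup
```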

This is not limited to startups

Large firms also misstate AI capabilities. Apple has already been sued over allegedly misleading claims about AI features in its devices and software. Two registered U.S. investment advisers, Delphia and Global Predictions, settled SEC charges and paid civil penalties after making false and misleading claims about their use of AI in investing.

And one of the most aggressive examples was Builder.ai. The company marketed an “AI that builds apps.” Investigations found that much of the actual work was done manually by human developers in India. The company later collapsed after reaching a unicorn valuation.

AI washing is not theoretical. It has financial, regulatory, and operational consequences.

The innovation that matters is not the model

The real value in most AI companies is in:

- workflow design

- domain logic

- data pipelines

- software integration

- human-in-the-loop quality control

Not novel transformers. Not custom embeddings. Not training runs. That is why the honest version of the story is simple:

Most AI startups are software companies using foundation models as infrastructure.

This is not bad. This is normal. Every iOS app is built on top of Apple's APIs. No one complains.

The problem is deception.

Why is this happening?

There are several structural reasons why AI washing has become widespread:

- Most people don’t truly understand AI: The field is highly complex. Few investors, journalists, or executives have deep knowledge of machine learning, neural network architectures, or training pipelines. This knowledge gap makes it easy to claim expertise without real substance.

- Investor pressure and hype cycles: The market rewards “AI-first” branding, not honesty about software integration. Founders feel compelled to exaggerate technical sophistication to secure funding.

- High cost of building models from scratch: Training large models requires massive compute, data, and expertise. Using existing APIs is far cheaper and faster.

- Low barrier to wrapping foundation models: With powerful APIs available, any team can create a product that looks AI-driven without inventing anything new.

- Marketing incentives outweigh engineering incentives: Media coverage tends to highlight “proprietary AI” rather than the underlying infrastructure or workflow innovation.

How to evaluate claims

A few direct questions will test most AI product claims:

- ask which model they run

- ask if inference is hosted internally or via API

- ask if they fine-tune or train from scratch

- ask for evidence of training infrastructure

If the answers are vague, assume it is a wrapper.
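The decision rule above can be written down as a small checklist. This is a minimal Python sketch; the `VendorAnswers` fields and the verdict strings are hypothetical labels for the four questions, and a missing answer (`None`) stands in for a vague one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VendorAnswers:
    """Hypothetical record of the four answers. None means the vendor was vague."""
    model: Optional[str]            # which model they run, e.g. "GPT-4" or an in-house name
    inference: Optional[str]        # "internal" or "api"
    training: Optional[str]         # "none", "fine-tune", or "from-scratch"
    infra_evidence: Optional[bool]  # could they show training infrastructure?


def verdict(a: VendorAnswers) -> str:
    # Vague (missing) answers: assume it is a wrapper.
    if None in (a.model, a.inference, a.training, a.infra_evidence):
        return "assume wrapper"
    # A third-party hosted model with no training of their own is a wrapper.
    if a.inference == "api" and a.training == "none":
        return "wrapper"
    # Training from scratch is a strong claim and needs evidence behind it.
    if a.training == "from-scratch" and not a.infra_evidence:
        return "unverified claim"
    return "specific answers, verify further"


print(verdict(VendorAnswers("GPT-4", "api", "none", False)))  # wrapper
print(verdict(VendorAnswers(None, None, None, None)))  # assume wrapper
```

The verdicts are deliberately coarse. Fine-tuning a hosted model through the provider's API, for example, lands in the last bucket and still deserves follow-up questions.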

Why this matters

False claims destroy trust. Investors waste capital. Customers waste time. Engineers waste years of their careers at companies that turn out to be “just a UI” over someone else’s model.

Conclusion

AI is real. The progress is real. The models are powerful. The future impact will be large.

However, the current market is flooded with companies that claim to have invented new AI, while actually selling repackaged GPT-style calls with a markup and a brand narrative.

The industry needs honesty.

Data scientists are in extremely short supply. No one expects you to build your own model in a week.

Using GPT-4 or Claude as the core model is not a weakness.

Lying about it is.